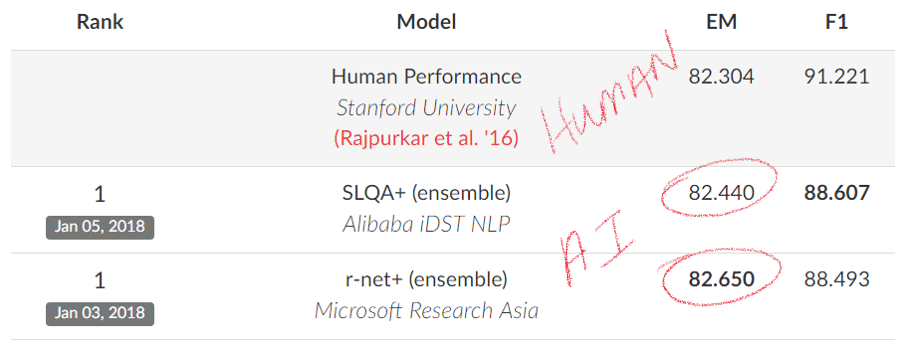

In case you didn’t notice, natural language processing made a huge leap forward last week, as an AI application beat a human in a reading comprehension test for the first time ever. The Stanford Question Answering Dataset (SQuAD), which uses a crowdsourced approach to approximate a human level of reading comprehension, was surpassed by Microsoft on January 3rd, then by e-commerce giant Alibaba on January 5th.

The SQuAD comprehension test is a set of over 100,000 question/answer pairs, based on 500 Wikipedia articles. Alibaba and Microsoft’s scores are a big deal because, up to this point, no computer has been able to answer questions as well as the crowdsourced human equivalent developed by Stanford (which in all fairness favors computers over humans).

As I wrote late last year, there is a general consensus amongst enterprise customer support executives that chat/conversational AI is ready for prime time. For example, a European call center executive told us recently: “Two years ago this was a technology, not a product. Today it’s a product that can solve business problems.” Customer support leaders of all kinds are sensing that now is the time to invest in conversational AI technology; if they don’t, they risk falling behind the competition in the race for a better, faster and more cost-effective omni-channel experience.

But, even with this incredible advancement in natural language AI, there’s still a big problem: understanding context. A computer’s ability to transcribe what you say has improved dramatically – think about how well Siri can type what you say – but there is still a considerable chasm between understanding what you say and understanding what you mean.

For example, think about the following phrase: “We saw her duck.” This is an amazingly ambiguous phrase when you think about it. It could mean:

- We saw someone’s bird.

- We saw someone bend down to avoid something.

- We cut a bird with a saw.

- Someone named “We” saw a bird.

This example is used across a number of academic papers about machine learning as evidence of the ambiguity inherent in our language. Understanding what was actually meant here is called “semantic representation”, which is where the heavy lifting of natural language comes in. Understanding requires either training machine-learning models or a lot of knowledge engineering, or both. But if you don’t do the heavy lifting, you will have a failed customer interaction, which is deadly in today’s customer-centric environments where switching costs are nearing zero.
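To make the ambiguity concrete, here is a minimal Python sketch that enumerates candidate readings of “We saw her duck.” It is a toy illustration only: the `LEXICON` and `PATTERNS` tables and the `readings` function are hypothetical names invented for this example, and real systems rely on trained models or much richer knowledge engineering rather than a hand-written lookup.

```python
from itertools import product

# Hypothetical toy lexicon: every word maps to the (tag, sense) pairs it can take.
LEXICON = {
    "we": [("SUBJ", "the speakers"), ("SUBJ", "a person named We")],
    "saw": [("SEE", "perceived"), ("CUT", "cut with a saw")],
    "her": [("POSS", "belonging to her"), ("OBJ", "her, as the one observed")],
    "duck": [("NOUN", "a bird"), ("VERB", "bend down")],
}

# Hypothetical tag sequences this toy grammar accepts as well-formed.
PATTERNS = {
    ("SUBJ", "SEE", "POSS", "NOUN"),  # we perceived her bird
    ("SUBJ", "CUT", "POSS", "NOUN"),  # we cut her bird with a saw
    ("SUBJ", "SEE", "OBJ", "VERB"),   # we perceived her bending down
}

def readings(sentence):
    """Return every sense combination whose tag sequence matches a pattern."""
    words = sentence.lower().rstrip(".").split()
    options = [LEXICON[word] for word in words]
    found = []
    for combo in product(*options):
        tags = tuple(tag for tag, _ in combo)
        if tags in PATTERNS:
            found.append([sense for _, sense in combo])
    return found

for reading in readings("We saw her duck."):
    print(" | ".join(reading))
```

Even this four-word sentence produces six candidate readings under the toy lexicon, and nothing in the sentence itself says which one the speaker meant. Choosing among them takes context, which is exactly the heavy lifting described above.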

So, if you’re thinking about adding chatbots or virtual agents to your internal or external customer support delivery model, remember that recognizing voice utterances or written text is pretty easy at this point. What’s hard is understanding what your customers really want, and how to take them on a journey that actually feels human to them.